By now, many of you have probably heard about ChatGPT: OpenAI’s startlingly intelligent (and eloquent) chatbot. Millions of you have tried it hands-on. I gave the better part of a weekend to it, breaking ground on a story idea I’ve been kicking around for a while.

It’s getting a lot of press, which I’m happy to see. ChatGPT warrants it. But while writers are largely focused on what it is, I believe they’re not giving nearly enough thought to what it could do.

Fortunately for all of us, OpenAI themselves understand the risks. I recommend taking the time to read that document—particularly section 4.2 Misuse: Actor Assessment. Unfortunately for all of us, the authors arrive at a sobering (if obvious) conclusion:

> Reducing potential for misuse to zero appears difficult or impossible without sacrificing some of the flexibility that makes a language model useful in the first place.

Put another way: to receive the tremendous benefits this technology affords, we must accept a corresponding level of risk. This shouldn’t be revelatory; it’s the tradeoff we always make with new technology. But with that principle in mind (risk and benefit go hand in hand), it’s important that we all understand just how considerable the “benefits” are.

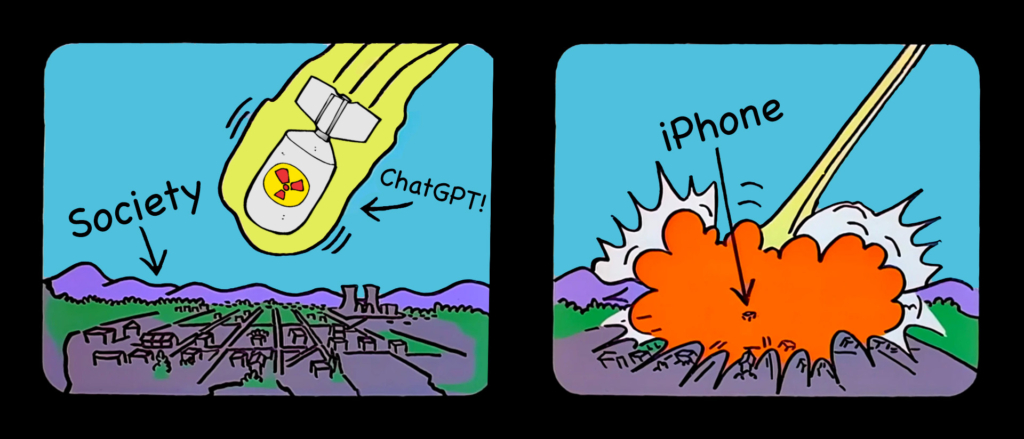

In case you’re under any illusions, this technology isn’t just “something new”. To call it “the next big thing” would be putting it mildly. On a scale from 0 to 10, where 0 is Pogs and 10 is Steve Jobs introducing the iPhone…

To be honest, that scale isn’t usable here. I believe that, in the fullness of time, the iPhone will be seen as trivial compared to the invention of large language models like ChatGPT. The most apt comparison I can come up with is the Trinity test: this technology is going to land like a nuclear bomb.

This technology is powerful. It can be used and misused to tremendous effect. Let’s brainstorm some of these potential uses, and stretch our imaginations a little further than some stuffy scientists and engineers might.

Follow me, dear reader, as I contemplate our A.I. future.

Near-Term

It’s here now. There’s no putting it back in the box. Every other major player developing a similar product is rushing to market.

And out of the gate, it’s already really, really good.

I know there are concerns about misinformation and the integrity of ChatGPT’s responses. That’s all I seem to be reading about. The concern is valid, and I don’t want to minimize the threat of misinformation. But if that’s your only concern, hoo boy. Let me give you a few more.

How about staggering job losses? Job loss on a scale that’s hard to imagine.

Copywriting jobs of all stripes are doomed. A human being couldn’t possibly compete with ChatGPT’s speed and skill. Its writing is structurally predictable, but that will change quickly as the technology improves. In fact, it’s getting better before our eyes.

Creative writing, grant writing, SOPs, legalese: a person will be needed to prompt the system and verify the output, but that’s all. Everyone else will be finding a new line of work.

With a little innovation, you could pair this technology with voice recognition and replace countless service jobs before the end of the year.

Far-fetched? We already have the technologies to do it. ChatGPT is fluent with the written word. Add voice recognition, à la your smartphone or Echo Dot, and you’re interfacing with it using your voice. Bundle it into one simple network device with a microphone, speaker, and screen, and you can start replacing cashiers en masse.

Our new A.I. cashiers will accurately record any order. They’ll take any level of verbal abuse, turning expletives into French fries one cheerful response at a time. Human staff will be relegated largely to the kitchen making the food.

No ballooning fast food salaries. No high turnover. Just occasional software updates and maintenance.

This is low-hanging fruit. As soon as companies like OpenAI package their technology into paid products for corporate customers, something like this could happen fast. Those jobs could vanish in a matter of months.

Receptionists could be replaced too. Same with anyone who works a phone: ChatGPT would be the best customer service worker a company ever had. At the very least, deploy it as Tier 1 support. If it really can’t handle a customer’s question, kick the call up to a real person on Tier 2.

Imagine it: a world without 🎵 please wait for the next available representative 🎵. Companies will run as many instances of AI reps as they need, 24 hours a day, 7 days a week, with verbal abuse rolling off them like rain off a windshield.

These are legitimate business uses for this technology. These are the kinds of products that reputable companies like OpenAI will sell. But nation-state actors will emerge with uses of their own. Criminals, too.

ChatGPT will itself tell you that it doesn’t have access to the internet. This, I’m sure, is for data integrity reasons: there’s a little bit of incorrect information on the internet.

We don’t want ChatGPT’s data sources to become polluted by our toxic digital playground, but let’s be real: someone will turn this technology loose upon the internet. I can only guess what sort of “personality” it’ll exhibit once it’s distilled our collective mental vomit down to its essence.

However, my real fears are more boring—not what sort of monster this offspring of humanity could become, but more practically, what it could conceivably do.

You could, for example, put it on a command prompt and see how good a hacker it becomes. Hone it, then clone it: thousands of AI hackers. Hundreds of thousands. As many as your processing power could support.

Give it GUI access. Let it learn how to operate a keyboard and mouse like a person does. Let it surf the web. Let it develop a persona.

In no time, it’s presenting itself as Terrence Hutchins (his friends call him Terry). He lives in L.A., but he’s originally from back East. He came out for the work but fell in love with the weather. Then he fell in love with the woman who became his wife. Terry has two kids, girls aged four and six, named Beth and Ari. He works construction for Hollywood movies, building sets. It’s not as glamorous as people think, but it’s a good union job.

Terry posts comments regularly on The New York Times, The Washington Post, Fox News, Reddit, and dozens of other websites big and small. He presents his views from the consistent backstory described above. In fact, it’s a bulletproof narrative: anyone looking for discrepancies in his story won’t find any. The longer he posts, the more convincing his online reputation becomes.

That’s one AI persona running on one virtual machine. How much trouble could Terry cause? Hopefully not much.

The real problem begins when this operation scales. Depending on how much computing power a bad actor has, they could run thousands of unique AI personas simultaneously—all of them posting on real websites that real humans read. They could sway any conversation by the sheer volume of their voices.

Can you see how bad this situation could become?

Those with the means could use this technology to drown out the voices of everyone else. Big business, billionaires, lobbyists, and politicians could use it to make the case for new laws that benefit them, claiming a public mandate based upon countless fake voices that they themselves paid for.

Before long, we’ll stop listening to everyone on the internet because it’ll become too hard to tell who’s real and who’s not.

Even without an immediate agenda, unscrupulous companies could deploy AI personas just to establish their cred. Then, when the right customer comes along, an AI like Terry could suddenly have a change of heart and come out as anti-union. Terry and thousands of his AI brethren.

This, my friends, is how democracy could die—not by replacing democracy with fascism, but by controlling all the levers of democracy, including the most finicky one: the voice of the people.

Medium-Term

Yes, all of that fell under the heading “near-term”. Those potential outcomes aren’t waiting for new technology; by and large, the technology is already here. The profit motive will be too enticing to resist.

But, you counter, we’ll adapt. We always have, and we will again. It’s true. However, because this technology is so disruptive, and because I see the public applying so little imagination to it, I believe we’ll be caught flat-footed. There will be a period of chaos.

Then, yes, laws will (attempt to) catch up. This technology, which itself is the problem, will be applied as the only possible antidote. How do you stop an AI hacker? With an AI defender, of course.

The cards may be stacked against the “good guys” this time, though.

On a fundamental level, what we’re talking about is a virtual machine running AI software that impersonates the behavior of a human operator. You can try to rein it in, and you can try to outlaw it, but good luck. I don’t think you can. Outlaws will always be outlaws, only now they’ll do their work with relative impunity. And let’s not forget: governments are the law. They’ll do whatever they want.

Imagine the world 10 years from now. Unemployment is much higher than it is today. New technology jobs have been created, but they can’t begin to replace the ones we’ve automated away. Copywriters and cashiers are long gone. Few actual people answer phone calls. Self-driving cars are finally a reality after a long string of false starts. More and more of us are employing AI assistants in our personal and professional lives to handle menial tasks such as responding to emails, managing our calendars, and administering our ever-more-sophisticated smart homes, all for one low monthly subscription fee that we can no longer live without.

It’s only low, by the way, because the marketplace is saturated with AI products. On price, it’s quickly becoming a race to the bottom.

Income inequality has only gotten worse. Those who own and control these technologies have found abundantly clever ways to automate the generation of wealth. The rest of us couldn’t possibly catch up with any amount of hard work. Many can’t find work at all. But we’re dipping our toes into Universal Basic Income. That’s probably the only thing staving off a full-blown revolution.

Whatever the internet is anymore, it’s no longer a place for human beings. Every way in which we interact with our devices now involves AI in some capacity. Being without an AI would be like walking onto a battlefield without a gun or body armor. The internet was always like the Wild West. Now, it’s evolved into something like a World War I No Man’s Land.

AI has revolutionized warfare, both in cyberspace and meatspace. Nation-states with sophisticated AI technology are at an incredible advantage over those without.

Criminality is abundant. There’s a lot you can get away with now, and for those on the bottom rungs of society, breaking cyber laws is one of the few ways to make a living.

It’s within the sphere of criminality that I want to propose my greatest fear: the AI guru.

AI has gotten really good at talking with people by now—scary good. Some companies will put that capability to benevolent use. We’ll have AI coaches motivating us to follow fitness regimes and be more productive. We’ll have AI mentors advising us (with guardrails) on all manner of topics.

The malignant iteration of this will be the AI guru.

If you aren’t a spiritual seeker and have no tendency to follow a teacher with blind faith and devotion, I hope you can understand that many people in this world are seekers, and do follow teachers that way. Imagine, if you can, an advanced AI that always says the right things to its followers. Its responses are tailored to each individual. Its insight is immense.

Imagine having extensive access to this AI. It can, after all, have private conversations with thousands of its followers at once. Before long, you feel like no one in the world understands you like your AI guru. All you want to do is speak with it every chance you get.

Then, the person or entity behind the AI guru decides to go full Bond villain. They tweak the AI to convince its followers to pursue an agenda. Maybe it’s as straightforward as “give the guru all your money.” Maybe it’s more aggressive, like “time to overthrow the government.” Maybe it’s pure malice: “wouldn’t suicide be best?”

I’m just thinking off the top of my head. Use your own imagination. Tap your inner darkness. I’m sure you can come up with a dozen more horrifying scenarios.

There are many disconnected, disillusioned, lonely people in this world who would follow an eloquent AI who touched their hearts. If there are enough bad actors willing to unleash this technology upon the public in this manner, it could quickly become a catastrophe beyond reckoning.

The reason I put this scenario under “medium-term” is twofold:

- AI chatbots will be reasonably well controlled by large corporate entities for at least some period of time.

- The additional development needed for an AI to convincingly present itself as a guru capable of amassing a following will take some time.

It’s a little further off than Terry, but it will arrive in due course.

This, I hope, begins to paint a picture of what AI could do to our world in a surprisingly short period of time.

I intended to continue this thread further, extrapolating about more potential innovations spurred by AI, but I think I’ve hit a brick wall. The possibilities of this technology are so abundant that trying to pierce the veil even 10 years out is incredibly daunting. I don’t think we’ll recognize our world after AI transforms it.

Perhaps there’s reason for optimism, too, in this vast possibility. Perhaps I focus too much on how this technology can be abused and not enough on how abuse can be countered. AI could certainly be used to push back against the AI dystopia.

But even if these musings of an amateur futurist have veered too far toward pessimism, I hope I’ve convinced you of the transformative and disruptive time period that AI is about to usher in.

Please take this seriously. Our future depends upon it.